Optimizing Tool Retrieval in RAG Systems: A Balanced Approach

RAG Course

This post is based on a conversation that came up during office hours in my RAG course for engineering leaders. Another cohort is coming up soon, so if you're interested, you can sign up here.

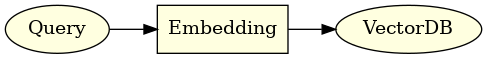

When it comes to Retrieval-Augmented Generation (RAG) systems, one of the key challenges is deciding how to select and use tools effectively. I've spent countless hours optimizing these systems, and many people ask me whether they should use retrieval to choose which tools to put into the prompt. What this question really comes down to is a trade-off between precision and recall: retrieve too few tools and the one the model needs may be missing from the prompt; retrieve too many and the prompt fills with irrelevant options. I've found that the key lies in balancing the two. Let me break down my approach and share some insights that could help you improve your own RAG implementations.

In this article, we'll cover:

- The challenge of tool selection in RAG systems

- Understanding the recall vs. precision tradeoff

- The "Evergreen Tools" strategy for optimizing tool selection